
Navigest

An in-vehicle multi-gesture control system for navigation that reduces visual distractions caused by screen use while driving

Type

Solo Project

Timeline

Aug - Nov, 2022

Tools Used

Figma, OpenAI

Overview

Driving safety is the fundamental requirement of any mobility solution, and driver distraction will remain an issue until Level 5 automated driving becomes widely available. Navigest is a system I designed to improve driving safety, primarily by using micro-gesture control around the steering wheel to reduce visual and manual distractions.

Background

Using a navigation system can easily distract the driver, and distraction is one of the biggest causes of car accidents: research shows that 80% of crashes have been caused by driver inattention. (Source)

Design Process

Define Problem

Research

Analyze

Design

User Testing

Delivery

Initial Thinking

Possible Issues

-

The distraction caused by the process of human-car interaction

Research Methods

-

Literature review

-

Quantitative research (survey)

-

Qualitative research (in-person interviews)

Research Insights

01

Using touchscreen navigation while driving easily causes cognitive load

When two tasks share the same modality (in this case, visual attention), the more demanding one task is (keeping eyes on the road), the worse the driver performs on the other (using the touchscreen), because of capacity constraints. (Source)

02

42% of participants feel dissatisfied with the touchscreen navigation system

A survey of 76 participants and six in-depth interviews revealed that, to users, a touchscreen navigation system is "hard to use while driving" and "time-consuming to locate a function."

“It is intuitive since a touchscreen console functions similarly to your mobile phone, but it is also complicated and less straightforward than the physical buttons in the car.”

——— Participant

Problem

Problems

01

Touchscreen input makes blind operation difficult; in most cases, drivers must engage their eyes and hands simultaneously.

02

The center screen sits at a wide angle from the driver's natural line of sight, so the driver must shift their gaze a long way to read the information on it.

"How might we reduce information interference in the process of human-vehicle interaction?"

Opportunity

Opportunity Areas

In-Car Interactive Methods Comparison

Physical Button

-

Allows operating the system without looking

-

Leverages the sense of touch

-

Looks dated by today's aesthetic standards

-

Increases the likelihood of defective products

Touchscreen

-

Users are already familiar with touchscreens

-

Costs less to manufacture (Source)

-

Causes visual distraction: lane deviation (Source)

-

Causes manual distractions: hands off the wheel

-

Offers low feedback force

Voice Control

-

Lowers lane deviation

-

Requires no hand movement

-

Lacks universal standards for commands

-

Requires a quiet environment, so it is hard to use in a noisy car with multiple people

Gesture Control

-

Shortens interaction distance

-

Reduces sight deviation

-

Requires users to memorize the gestures

-

Has low tolerance for incorrect gestures, which results in frustration

Highlights

Challenges

Studies have shown that gesture control is worth investing in because it aligns with immersive interaction

Autonomous vehicles (AVs) are one of the key mobility trends of the future, and they will redefine the space inside the car. Feedback will move from 2D screens on phones and cars toward more immersive interaction. (Source)

Efficiency of the average length of time on a secondary task

Efficiency of the average number of times on a secondary task

Design

Concept Prototype #1

Air Gesture Control in Navigation

My first gesture set was based on air gestures. However, even after three iterations and three rounds of testing, participants' reactions were not ideal.

Lo-Fi Prototype Iteration #1

Lo-Fi Prototype Iteration #2

User Testing Insights

01

Users felt uncertain and insecure when using air gesture control

When drivers used air gestures, their hands did not touch any object, which left them unsure of their input and feeling insecure.

02

Air gesture control has low feedback force and requires even more visual attention

Most feedback was delivered as on-screen information, with only a little via audio or other channels, so drivers had to check the center screen frequently to see the feedback, which caused visual interference.

03

Hands come off the wheel when using air gesture control

Although the interaction distance was shortened, air gestures required drivers to take their hands off the wheel, increasing the potential risk and driving distraction.

Concept Prototype #2

Micro-Gesture Control in Navigation

Further research led me to micro-gesture control. Unlike the large-scale hand movements of air gestures, micro-gestures focus on the interaction between the fingers and the steering wheel.

Air Gesture Control vs. Micro-Gesture Control

X Air gesture: made users feel unsure and insecure
√ Micro-gesture: provides direct interaction with the steering wheel

X Air gesture: had low feedback force and required eye attention
√ Micro-gesture: enables tactile feedback, giving instant feedback from the steering wheel

X Air gesture: required hands off the wheel
√ Micro-gesture: allows hands on the wheel at all times

Design of the Micro-Gesture

Based on the literature review, I avoided gestures that experiments have shown to be difficult to perform and costly to remember. (Source)

Preventing Accidental Triggering: Double Verification

Observational research: common hand behavior on the wheel

I filmed 12 participants for ten minutes each while they drove with their hands on the steering wheel. This allowed me to observe common hand behaviors while driving, so that gestures could be designed not to be confused with them.

Input

A successful command = detection by the gesture recognition sensor + detection by the tapping sensor

Output

Successful feedback = instant haptic feedback + system output
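The double-verification rule above (a command counts only when both sensors fire, and success triggers haptic feedback plus system output) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Navigest's implementation: the event classes, the 0.3-second matching window, and the `haptic`/`system` callbacks are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical event types; the case study does not describe the real sensor interfaces.
@dataclass
class GestureEvent:
    command: str      # command classified by the gesture recognition sensor, e.g. "zoom_in"
    timestamp: float  # seconds

@dataclass
class TapEvent:
    timestamp: float  # seconds, reported by the tapping sensor on the wheel

# Assumed maximum gap between the two detections for them to count as one command.
MATCH_WINDOW_S = 0.3

def verify_command(gesture: Optional[GestureEvent], tap: Optional[TapEvent],
                   window: float = MATCH_WINDOW_S) -> Optional[str]:
    """Return the command only if both sensors fired within `window` seconds."""
    if gesture is None or tap is None:
        return None  # a single sensor alone is treated as an accidental trigger
    if abs(gesture.timestamp - tap.timestamp) > window:
        return None  # detections too far apart to be the same gesture
    return gesture.command

def handle(gesture: Optional[GestureEvent], tap: Optional[TapEvent],
           haptic: Callable[[], None], system: Callable[[str], None]) -> bool:
    """Successful command -> instant haptic feedback + system output."""
    command = verify_command(gesture, tap)
    if command is None:
        return False
    haptic()         # haptic pulse first, so the driver does not need to look at the screen
    system(command)  # then the navigation system carries out the command
    return True
```

Requiring agreement between two independent sensors trades a little latency for fewer false positives from ordinary hand movements on the wheel, which is the concern the observational study above addresses.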

User Testing Design

Designing micro-gestures involves two main difficulties: how hard the gestures are to perform and how much effort they take to memorize. Stages one and two tested the usability of specific gestures, and stage three tested overall usability. Each round had 5 to 7 participants, aged 21 to 37, all holding US driver's licenses.

01

Performing Difficulties

Tapping vs. Swiping

Confirmed and solved the problem of gestures that are difficult to perform.

02

Learning Cost

Minimizing memory cost was consistently the hardest challenge for micro-gestures.

03

Validation

Participants were able to evaluate the gestures while driving in a simulated environment.

User Testing #1: Performing Difficulties

Testing Two Forms of Gesture

Swiping in the air simulated touchscreen behavior, while tapping on the steering wheel simulated a more natural interaction between hands and objects.

Testing Procedures

The Wizard of Oz Testing

Swiping in the air

Tapping on the wheel

-

5 minutes to learn the gestures

Participants could ask any questions.

-

“Show me the gesture” Question

I named a function, and participants performed the corresponding gesture.

-

“Tell me the function” Question

I performed a gesture, and participants named the corresponding function.

-

Feedback about the gestures

Insights

01

Although tapping gestures are physically easier to perform, they are difficult to remember because they differ from the usual gesture conventions of other electronic devices.

02

Swipe gestures matched what participants were used to on touchscreens, but they found them uncomfortable to perform. When they performed a gesture correctly and the command did not go through, they became frustrated and less willing to use it again.

Iteration: Remove All Swiping Gestures

Because tapping gestures are easier for users to perform, I replaced all swiping gestures with tapping gestures; my next challenge was to make them easy to remember.

Two Ways to Reduce the Learning Cost

Associative learning in micro-gesture

Leveraging each gesture's similarity in shape or symbolic meaning to familiar symbols, as well as users' habits with on-screen gestures.

Gesture Menu

Adopted the gesture menu widely used in AR/VR applications.

User Testing #2: Learning Cost

Stage two used the same testing procedure and the same Wizard of Oz method.

Insights

01

Users needed to be told clearly how each gesture relates to its similar symbol or gesture so that they could remember it faster and more accurately.

User Testing #3: Validation

I coded a gesture recognition program using the open-source libraries MediaPipe, OpenCV, and keyboard. After configuring the hardware and software for the test, users performed two rounds of driving tasks on a closed roadway, with the same route (including turns, lane changes, and intersections) and the same operations (zoom in, zoom out, confirm, and add a stop).

Task One: Touchscreen navigation (T)

Task Two: 5-minute gesture learning

Task Three: Micro-gesture navigation (G)

Task Four: Review of the experience
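The recognition code for this test is not included in the case study. As a rough illustration only, the sketch below shows one way a tap could be detected once MediaPipe Hands has produced per-frame landmarks: it follows the normalized y-coordinate of a single fingertip (in MediaPipe Hands, landmark 8 is the index fingertip) and flags a tap when the finger leaves its resting position and returns quickly. The function name `detect_taps`, the threshold, and the frame windows are assumptions, and the camera, MediaPipe, and keyboard plumbing is omitted so the logic runs on plain numbers.

```python
from statistics import median
from typing import List, Sequence

def detect_taps(ys: Sequence[float], threshold: float = 0.03,
                max_frames: int = 8, baseline_frames: int = 5) -> List[int]:
    """Return the frame indices where a tap starts (hypothetical heuristic).

    ys: per-frame normalized y of one fingertip (e.g. MediaPipe Hands landmark 8).
    A tap = the fingertip moves more than `threshold` away from its resting
    position and comes back within `max_frames` frames.
    """
    if len(ys) <= baseline_frames:
        return []
    rest = median(ys[:baseline_frames])  # resting position from the first frames
    taps: List[int] = []
    i = baseline_frames
    while i < len(ys):
        if abs(ys[i] - rest) > threshold:
            # Look for a quick return to the resting position.
            for j in range(i + 1, min(i + 1 + max_frames, len(ys))):
                if abs(ys[j] - rest) <= threshold:
                    taps.append(i)
                    i = j
                    break
            else:
                # No quick return: treat it as a larger hand movement (e.g. steering),
                # and skip past the whole excursion without registering a tap.
                while i < len(ys) and abs(ys[i] - rest) > threshold:
                    i += 1
                continue
        i += 1
    return taps
```

For example, a fingertip resting at 0.5 that dips to 0.56 for one frame and returns registers as a single tap, while a sustained shift to 0.6 does not. A fixed baseline like this is fragile when the hand slides along the wheel; the tracking loss noted later in this case study is one reason an image-based approach can struggle.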

Testing Environment Setup

Gesture Capturing

Data From the Testing

Insights

01

Although image-based gesture recognition was limited in accuracy and latency, micro-gestures already outperformed the touchscreen for Zoom in, Zoom out, and Confirm.

02

Once participants became familiar with micro-gestures, the gestures reduced their burden, although there was a slight initial learning cost.

03

Some commands often appeared in combination. For example, after zooming out on a map, users panned around to view additional information. Gesture combinations need to be considered in the next iteration.
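The first insight above rests on comparing the average time per command between the touchscreen (T) and micro-gesture (G) rounds. The sketch below shows that calculation under stated assumptions: the record format `(condition, command, seconds)` and the function names are hypothetical, and no actual study data is reproduced here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# Hypothetical record format: (condition, command, completion_time_seconds),
# where condition is "T" (touchscreen, Task One) or "G" (micro-gesture, Task Three).
Trial = Tuple[str, str, float]

def mean_times(trials: Iterable[Trial]) -> Dict[Tuple[str, str], float]:
    """Average completion time for each (condition, command) pair."""
    groups: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for condition, command, seconds in trials:
        groups[(condition, command)].append(seconds)
    return {key: mean(values) for key, values in groups.items()}

def gesture_faster(trials: Iterable[Trial]) -> List[str]:
    """Commands whose mean time under G is lower than under T."""
    means = mean_times(trials)
    commands = {command for _, command in means}
    return sorted(
        c for c in commands
        if ("G", c) in means and ("T", c) in means and means[("G", c)] < means[("T", c)]
    )
```

The same grouping works for the average number of attempts per command: record attempt counts instead of seconds.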

Final Design: Practical Use of Navigest

Ariel is invited to a friend's house for a party

She isn’t sure exactly how to get there, so she decides to drive off first while waiting for her friend to send over the exact address.

Scenario Zero

Gesture Positioning

When Ariel starts the car, the gesture recognition system is activated and scans Ariel's hands, locating the position of each finger and confirming the default status.

Voice Assistant: "Ariel sent you a message...Should I set the address as the destination?"

Scenario One

Confirm the Address and Navigate

Her friend sends the address over, and the voice assistant asks if the address should be set as the destination. Ariel confirms the address with a confirmation gesture and starts the navigation.

Scenario Two

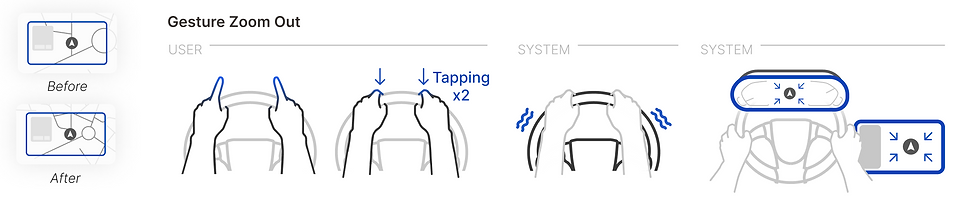

Zoom In / Zoom Out to Browse the Map

Ariel wants to view the next few blocks, so she uses the zoom in and the zoom out gesture to browse the map.

Scenario Three

Center the Map

After browsing, Ariel uses a gesture to re-center the map on her current position.

Scenario Four

Preview Route

Ariel wants to check the current road conditions and is concerned that traffic might interfere with her plans, so she enters the gesture menu and uses the "preview route" command.

Scenario Five

Add a Stop

She notices that there is no traffic on the road and decides to stop at a local restaurant to grab some food before heading to her friend's place.

Scenario Six

Cancel Adding the Stop

Ariel remembers that her friend has prepared dinner at her house, so she cancels adding the stop using the “cancel” gesture.

Scenario Seven

Mute the Navigation Prompt

Ariel finds the navigation prompt too loud and wants to mute it.

Future

-

Support combined commands (e.g., panning around the map after zooming in).

-

Tracking loss occurred with the image-based gesture recognition used in this project. In the future, radar or ultrasonic gesture recognition could be considered, as they are potentially more accurate.

Reflection

-

I became aware of the complexities involved in designing human-car interaction. Designers should always take into account extreme circumstances that may arise.

-

In contrast to the current touchscreen-dominated market, there may be a "dynamic interaction" market in the future.

-

I don't get frustrated when my designs aren't perfect, because I believe success must be preceded by many failures, and I am just one of many people participating in the attempt.
